Facebook and Instagram parent Meta has just rolled out new privacy updates for everyone under the age of 16, or 18 in some countries.

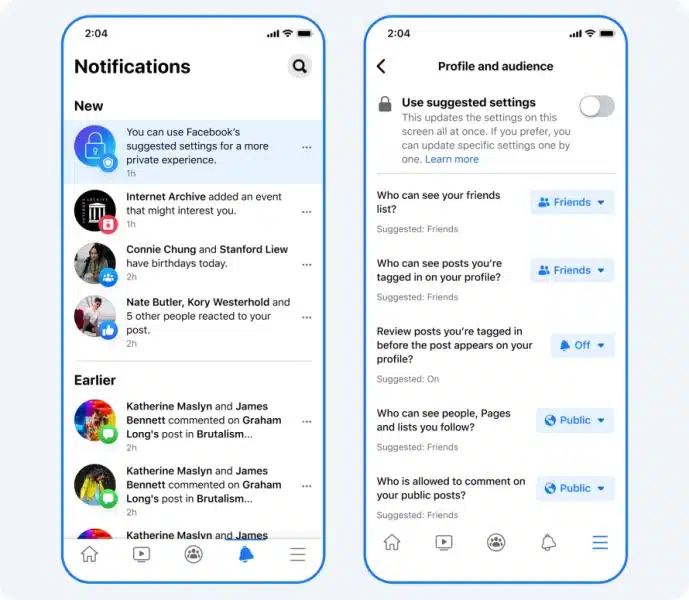

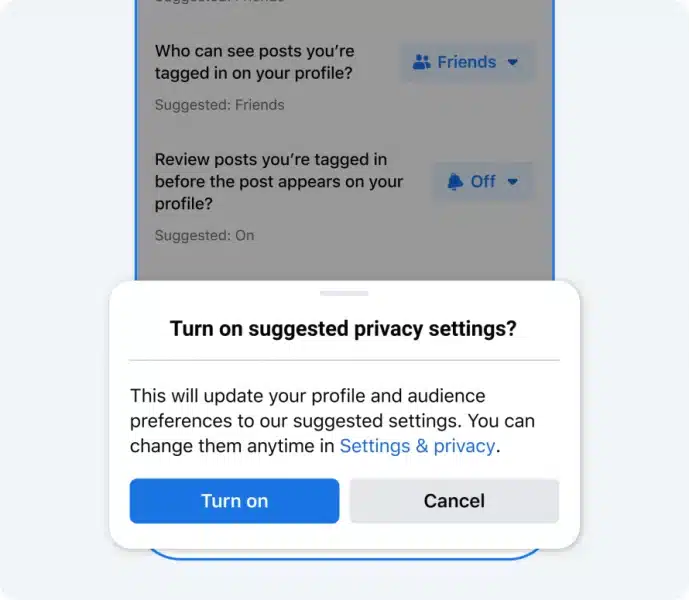

New privacy defaults. Starting today, teens will default to more private settings when they join Facebook. For teens already on the platform, Meta recommends making these changes manually. The new privacy settings affect:

- Who can see their friends list

- Who can see the people, Pages and lists they follow

- Who can see posts they’re tagged in on their profile

- Reviewing posts they’re tagged in before the post appears on their profile

- Who is allowed to comment on their public posts

Restricting connections. Meta is testing ways to protect teens from messaging suspicious adults they aren't connected to, and those adults won't be shown in teens' People You May Know recommendations. Meta clarifies that a "suspicious" account is, for example, one belonging to an adult who has recently been blocked or reported by a young person. As an added layer of protection, Meta is also testing removing the message button altogether on teens' Instagram accounts when they're viewed by suspicious adults.

New safety tools. Meta is also developing new tools that let teens report anything that makes them feel uncomfortable. On its blog, Meta says, "we're prompting teens to report accounts to us after they block someone, and sending them safety notices with information on how to navigate inappropriate messages from adults. In just one month in 2021, more than 100 million people saw safety notices on Messenger. We've also made it easier for people to find our reporting tools and, as a result, we saw more than a 70% increase in reports sent to us by minors in Q1 2022 versus the previous quarter on Messenger and Instagram DMs."

Stopping the spread of sensitive images. Meta is also working on new tools to help stop the spread of teens’ intimate images online. Meta says:

> We're working with the National Center for Missing and Exploited Children (NCMEC) to build a global platform for teens who are worried intimate images they created might be shared on public online platforms without their consent. This platform will be similar to work we have done to prevent the non-consensual sharing of intimate images for adults. It will allow us to help prevent a teen's intimate images from being posted online and can be used by other companies across the tech industry. We've been working closely with NCMEC, experts, academics, parents and victim advocates globally to help develop the platform and ensure it responds to the needs of teens so they can regain control of their content in these horrific situations. We'll have more to share on this new resource in the coming weeks.
>
> We're also working with Thorn and their NoFiltr brand to create educational materials that reduce the shame and stigma surrounding intimate images, and empower teens to seek help and take back control if they've shared them or are experiencing sextortion.

Dig deeper. Meta says that anyone seeking support and information related to sextortion can visit its education and awareness resources, including the Stop Sextortion hub on the Facebook Safety Center. You can also read Meta's full announcement on its blog.

Why we care. It's hard to criticize Meta for taking steps to protect teens and prevent harm to them. Though teens will default to the new settings when they sign up, they can still opt out if they choose. And teens already on the platform will have to select the new options manually, which many of them may not do.

At least parents can now learn about these changes and take appropriate steps to help protect their teens.