YouTube will now ask users to reconsider posting offensive comments

YouTube is easily one of the largest video platforms on the internet, and it is very easy for a creator to get buried under the mountain of videos uploaded every second. In an effort to support creators from all backgrounds, including those from minorities, YouTube is introducing a few updates to the platform, including a confirmation prompt before a potentially offensive comment is posted.

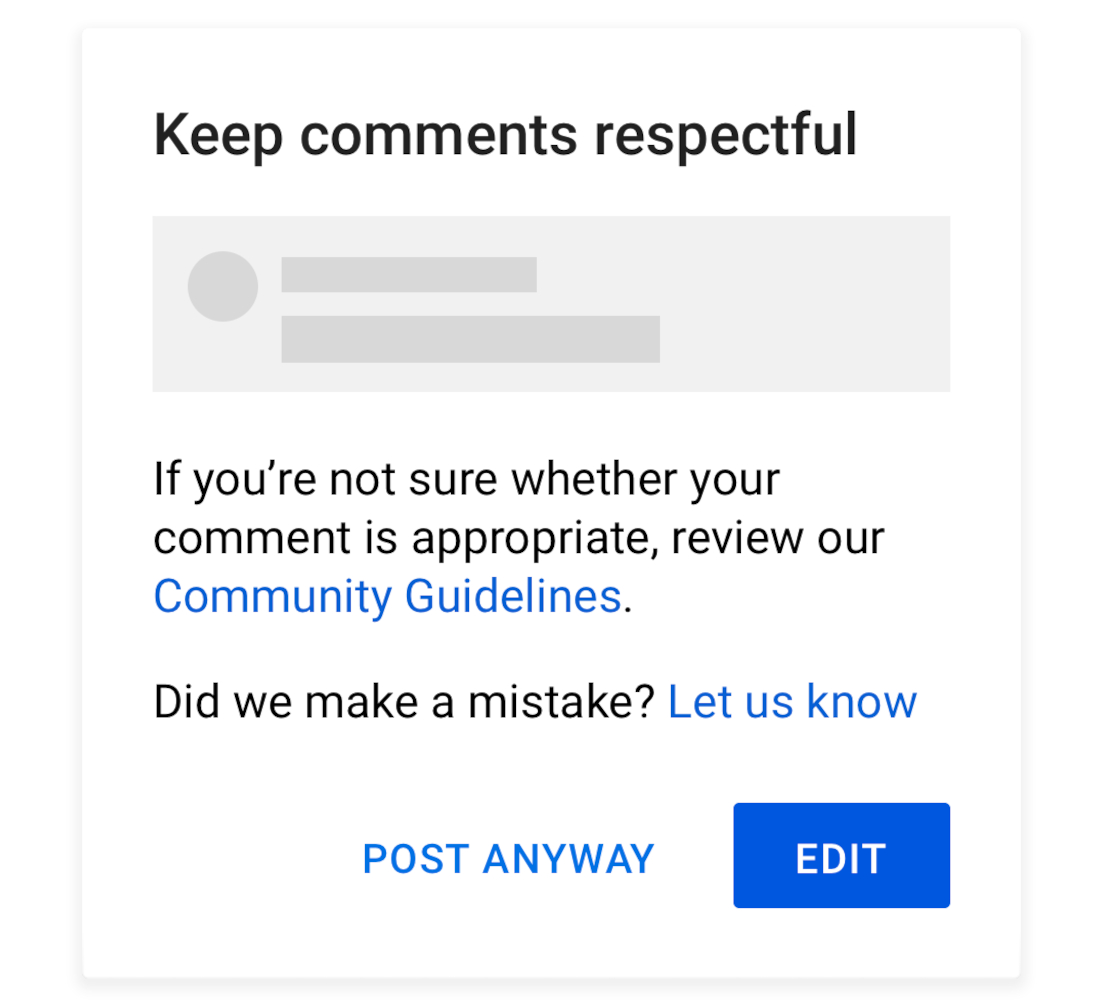

YouTube comments have long been a place where many hateful and harmful messages are shared. While creators have some control over comments, much of it is still very difficult to regulate. So YouTube is introducing a feature where, if a comment goes against YouTube’s community guidelines, the commenter will be prompted to confirm their message before posting it. It’s a small step, but it could make a difference.

On its own side, YouTube is testing new filters in YouTube Studio that will be better equipped to detect these types of comments and automatically hold them for review, so that creators do not have to deal with them directly.

YouTube is also trying to ensure that videos from creators in minority communities are treated fairly, which requires knowing who the creator is. To date, YouTube has been able to identify what is in a video very clearly, but not who uploaded it or, more specifically, which community the uploader belongs to. To address this, it is rolling out a voluntary opt-in survey in which uploaders can indicate their gender, sexual orientation, race and ethnicity.

With this information, YouTube wants to better understand how videos from creators in these marginalized communities perform, and it hopes to build better tools to uplift them. YouTube will not use this data for advertising purposes, and creators will be able to delete their data at any time.
